Problems can arise when we misunderstand the rhetorical intentions behind informally stated hypotheses, which are certainly not limited to scientific endeavors. A stated hypothesis is a very strange kind of propositional utterance. When we state a hypothesis, we don't know whether it's true, but we make the statement as if it were true anyway, and we don't consider that to be lying or equivocating either. Why not? It has something to do with your audience knowing why you're uttering a proposition of uncertain truth value, which is exactly why there can be problems if they don't know your proposition is a hypothesis. For example, Edward Sapir explicitly advanced the Penutian language family as a hypothesis to be investigated, and the linguistic community quickly adopted it as gospel, much to his distress. Fortunately it has largely been supported by data. It doesn't always work out this way, in science or in everyday conversation.

Since most languages mark questions and subjunctives explicitly, a fanciful solution would be a hypothesis particle. A short syllable (-ba would work) would follow any informal statement of a hypothesis, and it would mean this: "I make no assertion about the truth of the proposition preceding this particle, but I want to learn the truth of this proposition, and I invite close scrutiny and criticism of this proposition in service of this goal."

But reflect further on rhetorical intention and truth value in utterances. Our classification of rhetorical intentions is poor, since we don't recognize classes of utterances that are quite often explicitly encoded. A simple epistemological model of language is that all contentful utterances are commands, either directly to commit an action or to react to provided information, even if that's just for the listener to update his/her model of the world. As such we would expect most utterances to contain signals of rhetorical intention above and beyond the content of the sentence: there is the proposition being uttered, and there is the intention the utterer has for how the audience should react. Analytic philosophers attempted to approach natural language propositionally, although their conclusions were sometimes provincial, hobbled as they often were by an impoverished knowledge of the variety of language structures that exist outside Western Indo-European. Here is a phylogeny of coherent utterances which includes rhetorical intention.

1) COMMAND: "Get out of my house."

Extra-contential rhetorical tag: none.

1.1) Commands are the basic form of language, so it is not surprising that the command forms of verbs are usually as simple as the infinitive morphosyntactically, or even simpler.

2) CONTENTFUL EXCLAMATION: "A blue hummingbird!"

Extra-contential rhetorical tag: "Recognize this object/event I have verbally pointed to."

2.1) Some languages (e.g. Tagalog, Washoe) do encode this intention explicitly, with focus markers that declare what the speaker wishes the listener to focus on. These markers occur throughout sentence structures and are not limited to noun-phrase exclamations like the one above.

2.2) Dependent phrase structure is always just another example of recursive phrase structure. That said, shorthand often evolves for parsimony (e.g., "There is a red book on the table" is really just shorthand for, and content-wise exactly the same as, "There is a book that is red on the table"). In languages with copulae, like English, there superficially seems to be a distinction between main and dependent phrases only because of the phonetic realization (or lack thereof) of recursion, but languages that lack copulae illustrate the principle more clearly.

2.3) There is also an argument that constructions like "there is", or intransitive words equivalent to "exist", are really just reflexive copulae that tie off structural loose ends. Therefore the statement above is equivalent to the proposition "There is a hummingbird that is blue!" Potential investigation: ergative/absolutive languages with reflexive morphemes are known not to "cross systems"; in East Greenlandic, for example, using the ergative blocks use of the reflexive marker, so you can only use one system at a time. So how do reflexive copular constructions behave in ergative/absolutive languages that have both copulae and reflexivity?

3) DECLARATION: "I am going running at 3pm."

Extra-contential rhetorical tag: "I want you to accept as true the meaning of this proposition, and update your model of the world accordingly."

3.1) Although seemingly the most basic form of utterance, declarative propositions are not even close to the entirety of the contentful utterances we make. Still, they are the unmarked (zero-grade) form in all languages that do mark rhetorical intention.

4) YES/NO QUESTION: "Is he very tall?"

Extra-contential rhetorical tag: "I want you to reformulate this utterance as a proposition and then tell me your evaluation of its truth value."

4.1) Questions are usually marked, by word-order changes, by explicit particles (like Japanese -ka), or by intonation. English has few minimal pairs where pitch or stress alone makes a difference (e.g. the noun and verb "permit"), and such pairs are related in meaning, unlike the minimal pairs of full tone languages. Still, if the concept of minimal pairs is extended to rhetorical intention, intonation is indeed explicitly encoded and certainly distinguishes minimal pairs. ("He is running for governor." "He is running for governor?" These sentences mean different things.)

4.2) Yes/no questions are therefore actually a different kind of utterance from questions containing interrogative pronouns, and some languages do in fact mark them differently. Latin used the enclitic -ne (usually attached to the first word of the clause, often the verb) only in questions that did not contain interrogative pronouns.

4.3) In all cases that I know of, the verb takes the question particle in preference to other parts of speech; that is, if there's a verb in a sentence and the language has a question particle, the particle attaches to the verb. (Case in point: in Japanese, -ka typically goes on the verb, but in a single-noun utterance requesting clarification, the question particle can go on the noun. "He's working in the city," one speaker says, and the other says "Takamatsu-ka?", i.e. "In Takamatsu?")

I have argued previously that verbs and adjectives are both first-order modifiers, but that some first-order modifiers can modify two nouns simultaneously (these are called transitive verbs.) In this view, nouns alone cannot create a proposition since there is no relationship stated between them without verbs. Therefore, it makes sense that the rhetorical marker would be placed on the verb that changes the utterance from a list into a proposition.

5) INTERROGATIVE-PRONOUN-CONTAINING QUESTION: "What is the best restaurant in Portland?"

Extra-contential rhetorical tag: "This is a proposition whose truth value cannot be evaluated since I have deliberately used a placeholder ('what'). I want listeners to reformulate the statement as a proposition but include information that can be plugged into the placeholder slot in such a way as to make the proposition true."

5.1) Interrogative-pronoun-containing questions are also usually marked in some way (by word order, intonation, and/or explicit morphemes).

5.2) Languages often have multiple interrogative pronouns for different types of nominal information, but to my knowledge there are never dedicated adjectival, verbal, or other interrogative words. Interrogative pronouns can be pressed into one-off service as verbs and can even productively undergo morphosyntactic operations. (Imagine a woman has just been told her twelve-year-old son was seen driving to school: "He was what-ing to school?") Nonetheless, these kinds of operations on interrogative pronouns are never formalized.

6) CONTINGENT DECLARATION: "If it rains today, you're on your own."

Extra-contential rhetorical tag: "I have explicitly marked off a proposition whose truth value is influenced by other propositions stated in close proximity and whose truth I may not be certain of, or by the way I obtained the information."

6.1) There are two sub-structures here: one is the typical if-then formulation with subordinate clauses that we normally think of, but there is also the case of evidential markers, most famous among Tupi-Guarani languages. Both systems are ways of explicitly marking the truth-weighting that the listener should give to the proposition so marked.
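To make the contrast between the tags for categories 4 and 5 concrete, here is a toy Python sketch. It is only an illustration under invented assumptions: the listener's "world model" is a made-up dictionary of restaurant ratings (the names are hypothetical placeholders), a yes/no question is modeled as a closed proposition the listener evaluates to a single truth value, and an interrogative-pronoun question is modeled as an open proposition with a placeholder, answered by listing the fillers that make it true.

```python
from typing import Callable, Dict, Iterable, List

# A toy listener's world model. Restaurant names and ratings are invented placeholders.
RATINGS: Dict[str, float] = {"Restaurant A": 4.2, "Restaurant B": 4.8, "Restaurant C": 3.9}


def answer_yes_no(proposition: Callable[[], bool]) -> bool:
    """Category 4: evaluate a closed proposition and report its truth value."""
    return proposition()


def answer_wh(open_proposition: Callable[[str], bool], domain: Iterable[str]) -> List[str]:
    """Category 5: return the fillers for the placeholder that make the proposition true."""
    return [x for x in domain if open_proposition(x)]


def b_is_highly_rated() -> bool:
    # "Is Restaurant B rated above 4.5?" has no open slot.
    return RATINGS["Restaurant B"] > 4.5


def is_best(x: str) -> bool:
    # "What is the best restaurant?" becomes "x is the best restaurant", with x left open.
    return RATINGS[x] == max(RATINGS.values())


print(answer_yes_no(b_is_highly_rated))  # True
print(answer_wh(is_best, RATINGS))       # ['Restaurant B']
```

The two answer functions return different kinds of things (a truth value versus a list of slot-fillers), which is one way of restating 4.2's claim that these are different kinds of utterances.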

Though it doesn't merit a separate entry here, it's interesting that hypotheses aren't exactly questions, but they aren't exactly subordinate clauses either (though a hypothesis can be stated as either). It's my suspicion that humans not engaged in research do not engage in extended hypotheticals; the propositions they are unsure about tend to be simple enough that their hypotheses are all contained in single clauses delineated by if-then markers. For most humans, thoughts complicated enough to require more than one sentence and which are of uncertain truth are merely deception, not hypotheses to be tested.

However, if English does follow my humorous suggestion to develop an explicit hypothesis particle and a seventh utterance category, then I should re-state my earlier sentence as "A simple epistemological model of language is that all contentful utterances are commands, either directly to commit an action or to react to provided information, even if that's just for the listener to update his/her model of the world-ba."

Consciousness and how it got to be that way

Sunday, December 19, 2010

The Verb Regularization Rate in English

"The half-life of an irregular verb scales as the square root of its usage frequency: a verb that is 100 times less frequent regularizes 10 times as fast." From Lieberman et al. in Nature. An interesting question is which other morphosyntactic rules this generalizes to, like noun plurals, and to what extent it is influenced by phonetic realization (my guess: not very much). Pinker and many others knew qualitatively that the less a verb is used, the more likely it is to become regular in a given time period. Now we have the quantitative rule.
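The quoted scaling rule can be sketched in a few lines of Python. This is a toy sketch, not Lieberman et al.'s model: it assumes half-life = k * sqrt(frequency) with a made-up constant k (the paper fits its own values from data, which I'm not reproducing here), and it treats regularization as simple exponential decay, so a verb's chance of still being irregular after time t is 0.5 ** (t / half-life).

```python
import math

# Hypothetical proportionality constant in arbitrary time units; NOT a fitted value.
K = 1.0


def half_life(frequency: float, k: float = K) -> float:
    """Half-life of an irregular verb, assuming half-life = k * sqrt(frequency)."""
    return k * math.sqrt(frequency)


def fraction_still_irregular(t: float, frequency: float, k: float = K) -> float:
    """Chance a verb of this frequency is still irregular after time t (exponential decay)."""
    return 0.5 ** (t / half_life(frequency, k))


common, rare = 100.0, 1.0  # relative usage frequencies: 'rare' is 100 times less frequent
print(half_life(common) / half_life(rare))      # 10.0
print(fraction_still_irregular(10.0, common))   # 0.5 (one half-life of the common verb)
print(fraction_still_irregular(10.0, rare))     # 0.0009765625 (ten half-lives of the rare verb)
```

The ratio of half-lives comes out to 10 for a 100-fold frequency difference regardless of the value chosen for k, which is the quantitative content of the quote.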

Monday, September 20, 2010

Gene Ancestry Visualization Tool?

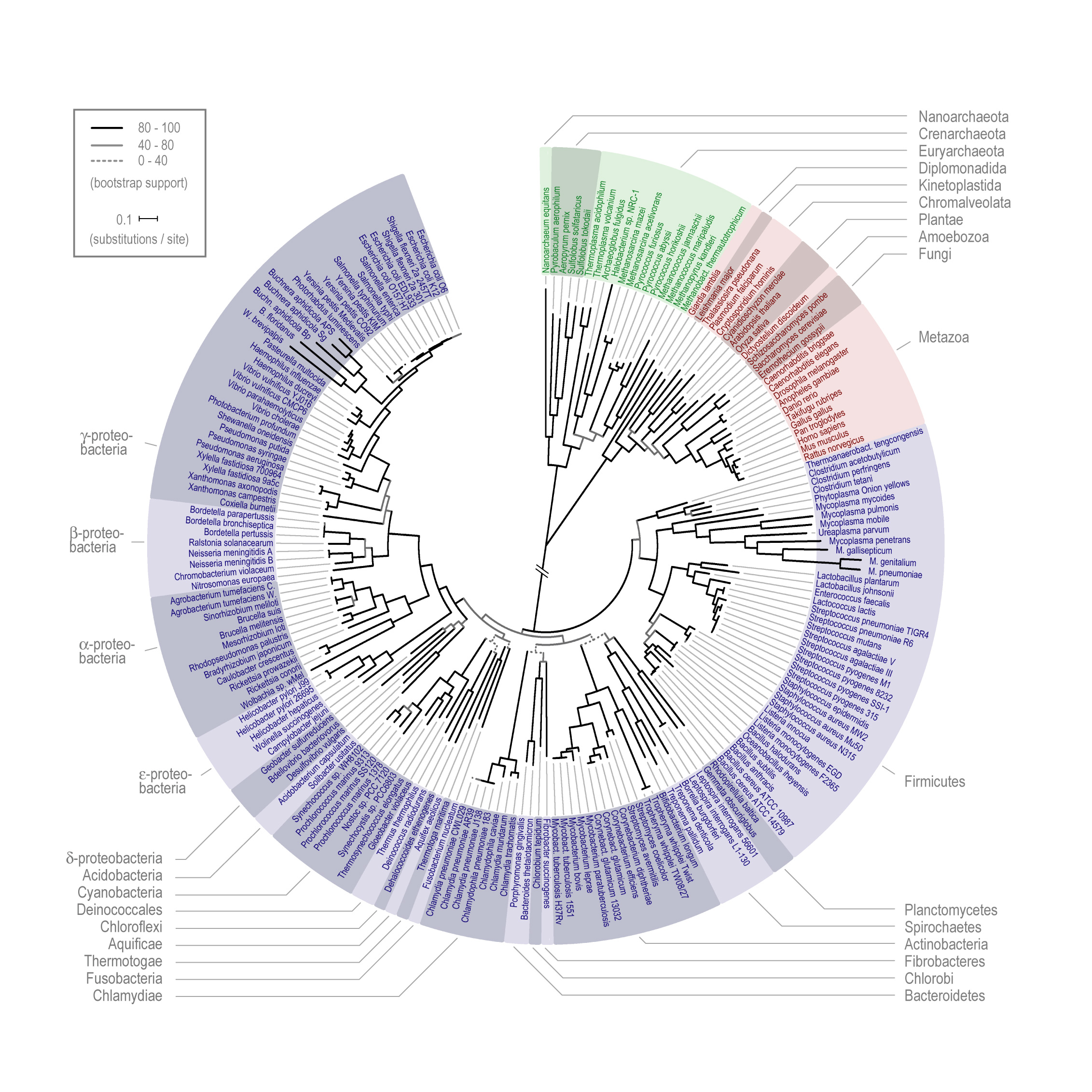

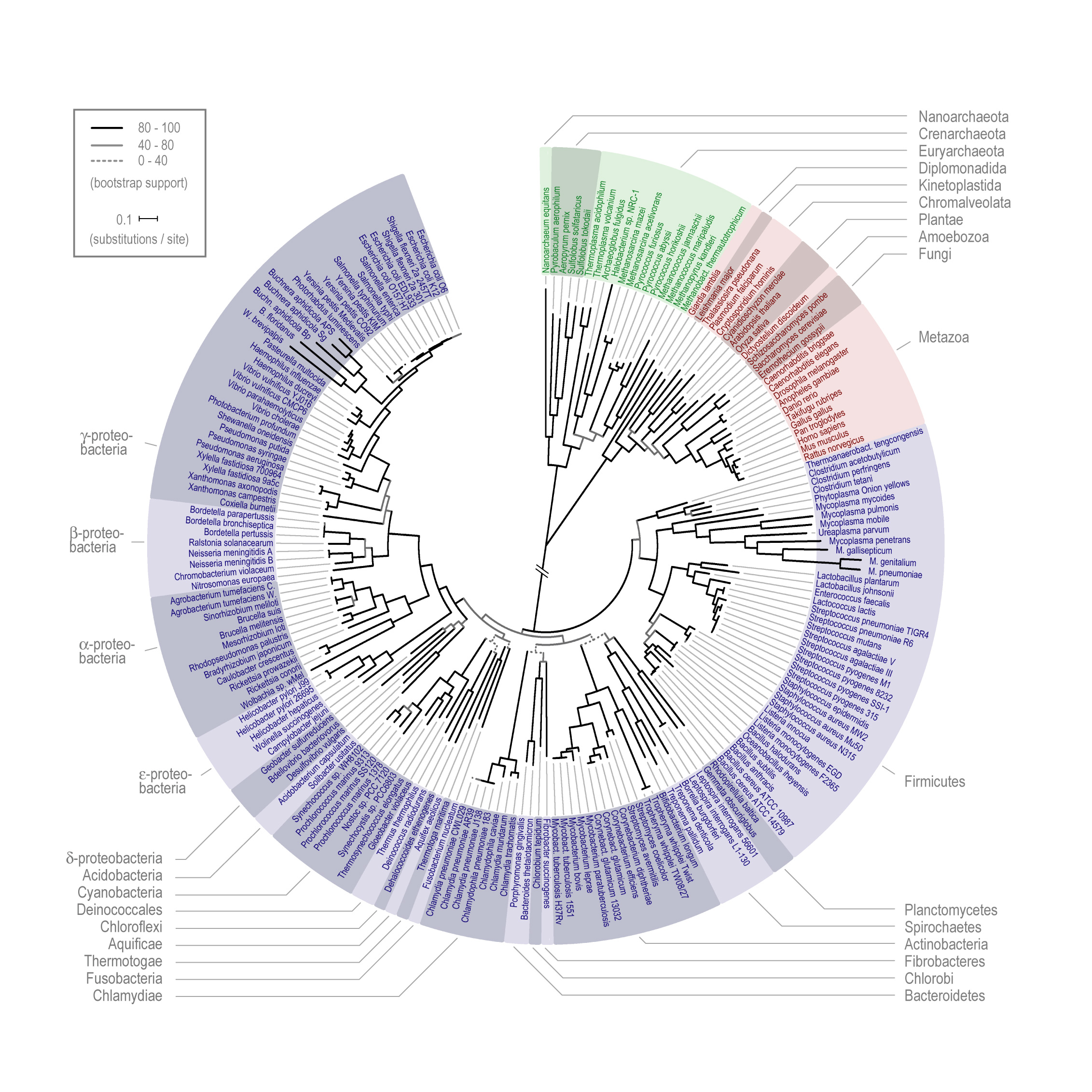

Most people are familiar with the radial-wheel format of showing the descent of species, like this one:

Image credit Bristol University Department of Chemistry

What would be really cool (and it may already exist, which is why I'm posting this) is the same tool for gene ancestry. (And here is where I make obvious my ignorance of both bioinformatics and the descent of genes.) Genes can often be shown to originate by duplication events. I'm probably being naive about the extent to which the ancestry of genes results from such events, or can be traced back to common ancestors shared with other genes in the same genome. But unless there was a lateral transfer event, all genes have to share common ancestry with other sequences in the organism, correct?

Ultimately we'd see another radial circle, except with genes radiating out from a common ancestor. The outer surface would be the human genome as it is now. You could place nested concentric circles representing previous phases of the genome (see mock-up below). Yes, I know the "surface" separating primates from non-primates (for example) doesn't represent any qualitative break, and the circle would be pretty lumpy since not all genes or gene families would branch and mutate at the same rates. (Genes I picked are illustrative only.)
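Here is a minimal Python sketch of the geometry I have in mind, under assumptions that are all mine: each node in a gene tree carries a hypothetical `depth` (time since the common ancestor, so the present-day genome sits on the outer circle), leaves are spread evenly around the circle, and each ancestral node sits at the mean angle of its children. A concentric ring at depth r then crosses exactly the lineages present at that ancestral stage, ignoring genes that were later lost. The gene names are placeholders.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class GeneNode:
    """One lineage in a hypothetical gene tree."""
    name: str
    depth: float  # time since the common ancestor: 0 = root, largest = present day
    children: List["GeneNode"] = field(default_factory=list)


def leaves(node: GeneNode) -> List[GeneNode]:
    """Present-day genes (tips) under this node, in left-to-right order."""
    if not node.children:
        return [node]
    return [leaf for child in node.children for leaf in leaves(child)]


def radial_layout(root: GeneNode) -> Dict[str, Tuple[float, float]]:
    """Map each node name to (radius, angle in degrees); the radius is just the depth."""
    tips = leaves(root)
    step = 360.0 / len(tips)
    pos = {tip.name: (tip.depth, i * step) for i, tip in enumerate(tips)}

    def place(node: GeneNode) -> float:
        if node.name in pos:  # tips are already placed
            return pos[node.name][1]
        angles = [place(child) for child in node.children]
        pos[node.name] = (node.depth, sum(angles) / len(angles))
        return pos[node.name][1]

    place(root)
    return pos


def lineages_at(root: GeneNode, r: float) -> List[str]:
    """Lineages crossed by a concentric ring at depth r (genes lost later are invisible)."""
    crossing: List[str] = []

    def walk(node: GeneNode) -> None:
        for child in node.children:
            if node.depth < r <= child.depth:
                crossing.append(child.name)  # this branch spans the ring
            elif child.depth < r:
                walk(child)  # the ring lies further out than this child

    walk(root)
    return crossing


# Hypothetical tree: an early duplication (dup1) giving geneA and geneB, plus geneC.
root = GeneNode("ancestor", 0.0, [
    GeneNode("dup1", 2.0, [GeneNode("geneA", 5.0), GeneNode("geneB", 5.0)]),
    GeneNode("geneC", 5.0),
])
print(radial_layout(root)["dup1"])  # (2.0, 60.0)
print(lineages_at(root, 1.0))       # ['dup1', 'geneC']
print(lineages_at(root, 3.0))       # ['geneA', 'geneB', 'geneC']
```

Placing each ancestor at the mean angle of its descendants keeps each gene family in a contiguous wedge, which is what makes the "lumpy" concentric rings readable.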

Now, there are far more genes than you could legibly display on a computer-screen-sized wheel, but this is also true for the species-descent wheel, which uses representative organisms. In my dream app, you could select certain gene families, or genes whose products catalyze certain classes of reactions or interact with other gene products. The point of such a visualization is that you could more easily see when pathways started appearing or becoming more complex. Of course lots of genes will have been lost in the interim, so we couldn't get a complete picture of what the genome was like at each point in the past. (Going back to the Grand-Daddy autocatalytic RNA would be cool, but unlikely.) If the gene-product features are sufficiently advanced, you could even conceivably find "holes" in pathways at ancestral stages, where there must have been gene products whose genes are no longer in the genome.

If there are any tools similar to this already out there, I would greatly appreciate a comment. This would be a valuable tool to investigate the evolution of pathways and systems. Especially neurons, of course.

Sunday, September 19, 2010

Lewy Body Dementia and Alpha Synuclein as a Lipid Manipulator

In terms of understanding moment-to-moment awareness, cognitive hypofunction disorders with clear genetic contributions are often uninteresting: the problem is usually that the wiring isn't there, or is wrong. The reason this is uninteresting is that if you want to know what underlies moment-to-moment consciousness, you have to look for properties of the brain that change on the same time-scale, allowing our subjective awareness to represent some of the information in the outside world.

For this reason, pharmacology often presents more research opportunities than disease states do, in particular NMDA antagonists and 5-HT2A agonists. But there is one disease state that is notorious for hour-to-hour (or longer) fluctuations in cognitive status: dementia with Lewy bodies. As you can see from the linked reference, it's not yet clear whether the disease is a subtype of Alzheimer's or a distinct condition.

Lewy bodies are inclusions of alpha-synuclein plus ubiquitin that appear in neurons in specific parts of the brain in disease states; the ubiquitin suggests that these are clumps of protein the neuron is trying to degrade. Their presence does not necessarily indicate that the patient had any of the additional symptoms of Lewy body dementia. Clinical Lewy body dementia is associated with symptoms above and beyond what Alzheimer's patients suffer. It also overlaps significantly with Parkinson's disease (PD); specifically, patients exhibit both the motor decline of PD and REM sleep behavior disorder. Unlike in Alzheimer's, onset is not insidious. Unlike either Alzheimer's or PD patients, and most relevant here, Lewy body dementia patients usually have recurrent visual hallucinations, and they are extremely sensitive to dopaminergic- and cholinergic-modifying medications.

When we think of real-time changes to nervous systems, we usually think of information being transmitted in an electrochemically mediated way, by neurotransmitter release and diffusion and by membrane depolarization. Membrane potentials would also be dramatically and rapidly affected by changes in lipid membrane properties, so I had previously wondered whether there were proteins expressed in the brain that manipulate or maintain membrane lipid content. It's interesting that alpha-synuclein a) is known to be located at the cell membrane in some fraction, b) is natively unfolded in the cytosol, c) interacts with polyunsaturated fatty acids, d) interacts with membranes in a way that correlates with serine phosphorylation, and e) still hasn't been assigned a clear function.

This is why a recent Journal of Molecular Neuroscience paper by Riedel et al. at the University of Oldenburg is important. Using an oligodendroglial cell line, they demonstrated the formation of alpha-synuclein aggregates (in vitro Lewy bodies) both in cells carrying a point mutation in alpha-synuclein that predisposes them to aggregate formation and in wild-type cells. This was done by adding DHA (an omega-3 polyunsaturated fatty acid) and then hydrogen peroxide for oxidative stress. Aggregates formed in both the mutant and the wild-type cells, though the mutant cells' aggregates were bigger (all compared to untreated controls).

My hypothesis is that alpha-synuclein is responsible for lipid processing of neuronal membranes to maintain electrochemical constancy, in response to physiologically rapid (minutes to hours) changes in the environment of the cell. In addition to the specific deficits in Lewy body dementia (associated with the brain region where the Lewy bodies appear), this may also explain the rapid fluctuation in cognitive status - cell membranes are unable to respond to a changing electrochemical environment because there's a problem with the protein that controls their lipid content. When alpha-synuclein catches up or the triggering physiological change (pH, solute concentration) reverses to previous levels, the cognitive deficits may disappear.

These findings, though they show an interaction, are therefore causally backwards relative to my hypothesis: here, changes to lipids initiate aggregation. It could be that once aggregation begins, alpha-synuclein function is off-line, and any new alpha-synuclein produced by the cell gets immediately caught in the tangle and can't perform. (There's evidence that more alpha-synuclein than normal is found at the membrane in disease states.) Here are some future experiments, which may already have been done but which I missed in my quick survey of the literature: 1) patch clamp recordings of dopamine receptors on cells in culture with loss-of-function mutations or knockouts of alpha-synuclein, especially in response to differences in charge, pH, and buffer concentration (to mimic physiologic changes in extracellular fluid); 2) measurement of the incorporation of individual fatty acid chains into cell membranes in knockout cells relative to controls.

I'm both excited and nervous because in the coming weeks I will be interacting with patients in the clinic who have this disease, which is why I'm motivated to understand it.

Saturday, August 21, 2010

Thoughts on Newcomb

I'm currently reading Robert Nozick's Socratic Puzzles. It contains two essays about Newcomb's Problem. If you've not encountered Newcomb before, a brief description follows, and if you want more, the most extensive discussion I've seen anywhere is at Less Wrong. I can sum up this post thusly: how can Newcomb be a hard problem?

Imagine a superintelligent being (a god, or an alien grad student as Nozick imagines, or more plausibly a UCSD medical student. It's up to you.) This superintelligent being says that it can predict your actions perfectly. It shows you two boxes, Box #1 and Box #2, into which it will place money according to rules that I will shortly give. As for you, you have two options: either open both boxes and take the money from both if there is any, or open only Box #2 and take the money from just Box #2. Now here are the rules, and the kicker. Since the being can predict your actions perfectly, it does the following trick. If it predicts that you're going to take just Box #2, it will place a thousand dollars in box #1, and a million dollars in Box #2. So in this instance, you will get a million dollars, but you'll miss out on the thousand in box #1. On the other hand, if it predicts that you will take both boxes, the being will place a thousand dollars in box #1, but place nothing in Box #2. In that case, you end up with just a thousand dollars. So in other words: the being always puts a thousand dollars in Box #1, whereas in Box #2 there's either a million, or nothing.
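The rules above amount to a small payoff table indexed by the being's prediction and your actual choice. Here is a minimal Python sketch of that table (the enum and function names are my own, not anything from Nozick); it prints all four cells, then shows that with a perfect predictor the prediction always equals the choice, so only the two diagonal cells can actually occur.

```python
from enum import Enum


class Choice(Enum):
    ONE_BOX = "take Box #2 only"
    TWO_BOX = "take both boxes"


def box_contents(prediction: Choice) -> tuple[int, int]:
    """Dollars the being puts in (Box #1, Box #2), given what it predicts you'll do."""
    box1 = 1_000  # always a thousand dollars
    box2 = 1_000_000 if prediction is Choice.ONE_BOX else 0
    return box1, box2


def payoff(prediction: Choice, choice: Choice) -> int:
    """Your winnings for a given prediction and a given actual choice."""
    box1, box2 = box_contents(prediction)
    return box2 if choice is Choice.ONE_BOX else box1 + box2


# Full 2x2 table, including the cells where the prediction is wrong.
for prediction in Choice:
    for choice in Choice:
        print(f"predicted {prediction.name}, chose {choice.name}: ${payoff(prediction, choice):,}")

# With a perfect predictor, prediction == choice, so only the diagonal is reachable.
perfect = {choice.name: payoff(choice, choice) for choice in Choice}
print(perfect)  # {'ONE_BOX': 1000000, 'TWO_BOX': 1000}
```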

So, now the superintelligent being has gone back to its home planet of La Jolla, and you are left wondering what to do. Assuming you want the most money possible, which option do you pick and why?

Figure 1. Decision table for Newcomb's Problem.

There's been a lot of discussion about Newcomb's Problem, and not all of the responses adhere to the standard one-or-both answer. But I take the point of this particular logic-koan to be that we're to decide, based on the givens of the problem, which of the options we would take, so cute answers about trying to cheat, making side bets, etc. are wasting our time. If we're going to introduce those kinds of non-systematic "real world" options into this exercise, then we're going to need a lot more context than we currently have to make a decision. In fact, after ten years of living in Berkeley I'm surprised that I haven't yet met someone on a street corner claiming to be an alien with a million dollars for me, but if I did I would walk away and not play at all. (Come to think of it, I frequently get similar spontaneous offers of a million dollars or more in my spam folder, which I ignore at my peril.)

My own answer is to take only box #2, expecting to get a million dollars. Why? Because I want a million dollars, and the superintelligent alien is apparently smart enough to know that I'll gladly cooperate and not try to make myself unpredictable (more on this in a moment). Why try to be a smart-ass about it? (It's both to your disadvantage and not even possible anyway, per the terms of the problem.) The being told you where it would put the million dollars (or not) based on your actions, and it's a given in the problem that the being is perfect at predicting your actions. This is what gives the both-boxers fits. They say one-boxers are idiots because if the being got my choice wrong, thinking I would choose both boxes, it put nothing in box #2. In that case I open only box #2 and get zero (the alien thought I was going to take both and at least get a grand, but he was having an off day).

I will be beating the following dead horse a lot here: the problem states you have a reliable predictor. Why does Figure 1 above even have a right-side column? If you assume the being is fallible, then you're not thinking about Newcomb's problem as stated any more: you're either ascribing properties to the being that conflict with what is given in the problem, or you're making stuff up. (Maybe the alien is fallible and copper and zinc are toxic to it! That way it won't predict in time that I'm going to kill it by throwing my spare pennies and brass keys at it, and then I can get the full amount from both boxes! Sucker. Ridiculous? No more so than worrying about the given perfect predictor's not being perfect.)

Figure 2. Correction to Figure 1. This figure is the actual table for Newcomb's Problem. Figure 1 is somebody else's not-Newcomb problem, one that features fallible aliens.

Complaints about the logic of the Box #2-only response (which is the majority's response, if the ones Nozick cites in one of his essays are representative) typically focus on two things: one, that we're assuming reverse causality, i.e. that we must think our choice of box will make there be a million dollars in it; and two, that it suggests we don't have free will. I dismiss the second objection out of hand because the whole point of the problem is that the being is a reliable predictor of human behavior: for that one aspect of your behavior, in this problem, no, you don't have free will. Look: we already accepted a being with near-perfect predictive powers. Without that, the problem changes and we have to guess how likely the being is to get it right. But as long as we have Mr./Ms. Perfect Predictor, the nature of its mechanism is unimportant. You can justify how it accomplishes this however you like (we don't have free will in this respect, or the alien can travel through time), but the point is that any cleverness or strategy or philosophizing you do has already been taken into account by the alien.

But things can be predicted in our world, including human behavior, and for some reason this doesn't seem to provoke outcries about undermining the concept of free will. Like it or not, other humans predict things about you all the time that you think you'd have some conscious control over (whether you'll quit smoking, your credit score, your mortality), and across the population these predictions are quite robust. They don't always have the individual exactitude that our alien friend does, of course. But at the very least you must concede that if our alien friend is even as smart as humans, then after playing this game with us multiple times its ability to predict which box you take would be better than random chance, and you would get some information from that about which box you should pick. Being completely honest, I think a lot of the resistance to one-boxing comes from the repugnance with which some people regard the idea that their behavior is extremely predictable. (Hey! News flash: it is.) Nozick even offers additional information in his example by saying that you've seen friends and colleagues play the same game, and the being predicted their choice reliably each time. Come on, Plato, do you want a million dollars or not? Absolute no-brainer!
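The point that even an imperfect but better-than-chance predictor tells you which box to pick can be made quantitative. Here is a Python sketch of the expected-value calculation one-boxers usually give, assuming the being predicts correctly with some probability p (p is my parameter; the problem as stated fixes p = 1). Two-boxers reject this framing and appeal to the dominance principle instead (Box #1's thousand is yours on top of whatever is already in Box #2), so this shows only the one-boxer's side of the ledger.

```python
def expected_payoffs(p: float) -> tuple[float, float]:
    """Expected winnings (one-box, two-box) if the being predicts correctly with probability p."""
    one_box = p * 1_000_000                # correct prediction: the million is in Box #2
    two_box = 1_000 + (1 - p) * 1_000_000  # wrong prediction: the million is there anyway
    return one_box, two_box


def break_even_accuracy() -> float:
    """Accuracy at which both options have equal expected value.

    Solve p * 1e6 = 1e3 + (1 - p) * 1e6, giving p = (1e6 + 1e3) / (2 * 1e6).
    """
    return (1_000_000 + 1_000) / (2 * 1_000_000)


for p in (1.0, 0.75, 0.5):
    print(p, expected_payoffs(p))
print(break_even_accuracy())  # 0.5005
```

On this calculation any predictor even slightly better than a coin flip (p above 0.5005) favors one-boxing, and the perfect predictor of the problem as stated makes it lopsided: $1,000,000 versus $1,000.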

The first objection (regarding self-referential decision-making) is slightly more fertile ground for argument, and it's the one to which Nozick devotes the most time. The idea is that you're engaging in circular logic: I'm deciding to one-box, therefore the being knew I would one-box, therefore I should decide to one-box. (Again: what's the whole point of the exercise? That whatever decision you're about to make, the being knew you would make it, including all the mental gyrations you're going through to get to your answer.) Nozick gives the example of a person who doesn't know whether his father is Person A or Person B. Person A was a university scientist who died in mid-life of a painful disease that would certainly be passed on to all his offspring; children of Person A would be expected to display an aptitude for technical subjects. Person B was an athlete, and likewise his children would be expected to display an athletic character. The troubled young man is deciding on a career, noting that he has excelled equally in both baseball and engineering. "I certainly wouldn't want to have a painful genetic disease. Therefore, I'll choose a career in baseball. Since I've chosen a career in baseball, that means my true prowess is in athletics and therefore B was my father, and I won't get a genetic disease. Phew!"

Yes, that would be a ridiculous decision process. The difference between the two is this: the category the decider is in the whole time is defined in Newcomb as definitely affecting the decision, whereas in Nozick's parallel, it does not (he could've gone either way.) Whatever you decide in Newcomb, the alien knew you would go through your whole sequence of contortions, and you were in that category all the while. Whether such a deterministic category is meaningful is a different and probably more interesting question than Newcomb as-is. Here's another example: you're in a national park, following a marked trail. You get so far along the trail until you come to a frighteningly steep rock face with only a single cable hammered into it. You reason, "I am about to proceed up these cables. If I'm about to do it, it's only because my action was anticipated by the national park people who design the map and trails and they can predict my actions as a reasonably fit and sensible hiker, and furthermore they put these cables here; they're not in the business of encouraging people to do foolishly dangerous things. Therefore, because I am going to do it, it is safe and I should do it." (Any reader who's ever braved the cables on Half Dome in Yosemite by him or herself without knowing ahead of time what they were getting into has had this exact experience.) This replicates the decision process relating to the for-some-reason mysterious perfect predictor: "I am about to open Box #2 only. If I'm about to open it, the superintelligent being would have put a million dollars in it. Therefore I should open Box #2 only." In fact, all the time we go through such circular reasoning processes as they relate to other human beings who are predicting are actions either in general or specifically for us: I am going to do A, and A wouldn't be available unless other agents who can predict my actions reasonably well knew I would come along and do A, therefore I should do A. 
This still may be an epistemological mess (something I'm not going to debate here) but the fact is that we use this kind of reasoning constantly, living in a world shaped in the to-us most salient ways by other agents who can predict our actions.

Incidentally, I intentionally used the example of the national park because that we use that kind of reasoning becomes obvious when you're trying to decide whether to climb something or undertake an otherwise risky proposition in a wilderness area, rather than on developed trails with markers; you become acutely aware that this circular justification heuristic based on other agents predicting your actions is suddenly unavailable, and then when it's available again (five miles further on, you run across an old trail) the arrangement seems quite obvious.

As a final note, as in other games (like Prisoner's Dilemma) the payouts can be critically important to how we choose. As the problem is traditionally stated (always a thousand in box #1, either zero or a million in box #2), it actually makes the decision quite easy for us, even if we're worried about the fallibility of our brilliant alien benefactor (which again, if we are, then what's the point of this whole exercise!?!?). Making a decision that throws away a thousand for a crack at a million is not for most humans in Western democracies a bad deal. (If someone could show me a business plan that had a 50% chance of turning a thousand bucks into a million within the few minutes that the Newcomb problem could presumably take place in, I'd be stupid not to do it!) On the other hand if I lived in the developing world and made $50 a month and had six kids to feed, I might think harder about this. (This is the St. Petersburg lottery problem, in which the expected utility of the same payout differs between agents based on their own context, and can be applied to other problems as well.) Similarly if it were five hundred thousand in Box #1 and a million in Box #2, things would be more interesting, for my own expected utility at least. Opening a box expecting a million and getting nothing doesn't hurt so much if you would have only got a thousand by playing it safe and opening both; it would be pretty bad if you'd expected a million and got nothing but could still have half a million if you'd played it safe. (For me. Bill Gates would probably shrug.)

Overall, the whole exercise of Newcomb's Box, as given, seems to me uninteresting and obvious. But enough smart people have gone on debating it for long enough that I must be some kind of philistine who's missing something about it. Nonetheless the arguments I've seen so far are not compelling; feel free to share more.

Imagine a superintelligent being (a god, or an alien grad student as Nozick imagines, or more plausibly a UCSD medical student; it's up to you). This superintelligent being says that it can predict your actions perfectly. It shows you two boxes, Box #1 and Box #2, into which it will place money according to rules I'll give shortly. As for you, you have two options: either open both boxes and take the money from both, if there is any, or open only Box #2 and take the money from just Box #2. Now here are the rules, and the kicker. Since the being can predict your actions perfectly, it does the following trick. If it predicts that you're going to take just Box #2, it will place a thousand dollars in Box #1 and a million dollars in Box #2. So in this instance, you will get a million dollars, but you'll miss out on the thousand in Box #1. On the other hand, if it predicts that you will take both boxes, the being will place a thousand dollars in Box #1 but nothing in Box #2. In that case, you end up with just a thousand dollars. In other words: the being always puts a thousand dollars in Box #1, whereas Box #2 holds either a million or nothing.

So, now the superintelligent being has gone back to its home planet of La Jolla, and you are left wondering what to do. Assuming you want the most money possible, which option do you pick and why?

Figure 1. Decision table for Newcomb's Problem.

There's been a lot of discussion about Newcomb's Box, and not all of the responses adhere to the standard one-or-both answer. But I take the point of this particular logic-koan to be that we're to decide based on the givens of the problem which of the options we would take, so cute answers about trying to cheat, making side bets, etc. are wasting our time. If we're going to introduce those kinds of non-systematic "real world" options into this exercise, then we're going to need a lot more context than we currently have to make a decision. In fact after ten years living in Berkeley I'm surprised that I haven't yet met someone on a street corner claiming to be an alien with a million dollars for me, but if I did I would walk away and not play at all. (Come to think of it, I frequently get similar spontaneous offers of a million dollars or more in my spam folder which I ignore at my peril.)

My own answer is to take only Box #2, expecting to get a million dollars. Why? Because I want a million dollars, and the superintelligent alien is apparently smart enough to know that I'll gladly cooperate and not try to make myself unpredictable (more on this in a moment). Why try to be a smart-ass about it? (It's both to your disadvantage and not even possible anyway, per the terms of the problem.) The being told you where it would put the million dollars (or not) based on your actions, and it's a given in the problem that the being is perfect at predicting your actions. This is what gives the both-boxers fits. They say one-boxers are idiots because if the being got my choice wrong and thought I would choose both, it didn't put anything in Box #2. If the being is wrong, I open only Box #2 and I get zero (because the alien thought I was going to take both and at least get a grand, but he was having an off day.)

I will be beating the following dead horse a lot here: the problem states you have a reliable predictor. Why does Figure 1 above even have a right-side column? If you assume the being is fallible, then you're not thinking about Newcomb's problem as stated any more: you're either ascribing properties to the being that conflict with what is given in the problem, or you're making stuff up. (Maybe the alien is fallible and copper and zinc are toxic to it! That way it won't predict in time that I'm going to kill it by throwing my spare pennies and brass keys at it, and then I can get the full amount from both boxes! Sucker. Ridiculous? No more than worrying about the given perfect predictor's not being perfect.)

Figure 2. Correction to Figure 1. This figure is the actual table for Newcomb's Problem. Figure 1 is somebody else's (not Newcomb's) problem, one that features fallible aliens.

Complaints about the logic of the Box #2-only response (which is the majority response, if the ones Nozick cites in one of his essays are representative) typically focus on two things: one, that we're assuming reverse causality, i.e. that we must think our choice of boxes will make there be a million dollars in Box #2; and two, that it suggests we don't have free will. I dismiss the second objection out of hand because the whole point of the problem is that the being is a reliable predictor of human behavior; for that one aspect of your behavior, in this problem, no, you don't have free will. Look: we already accepted a being with near-perfect predictive powers. Without that, the problem changes and we have to guess how likely the being is to get it right. But as long as we have Mr./Ms. Perfect Predictor, the nature or mechanism is unimportant. You can justify how it accomplishes this however you like (we don't have free will in this respect, or the alien can travel through time), but the point is that any cleverness or strategy or philosophizing you do has already been taken into account by the alien.

But things can be predicted in our world, including human behavior, and for some reason this doesn't seem to provoke outcries about undermining the concept of free will. Like it or not, other humans constantly predict things about you that you think you'd have some conscious control over (whether you'll quit smoking, your credit score, your mortality), and across the population these predictions are quite robust. They don't always have the individual exactitude that our alien friend does, of course. But at the very least you must concede that if our alien friend is even as smart as humans, then after playing this game multiple times with us, its ability to predict which box you take would be better than random chance, and that would give you some information about which box you should pick. Being completely honest, I think a lot of the resistance to one-boxing comes from the repugnance with which some people regard the idea that their behavior is extremely predictable. (Hey! News flash: it is.) Nozick even offers additional information in his example by saying that you've seen friends and colleagues play the same game, and the being predicted their choices reliably each time. Come on Plato, do you want a million dollars or not? Absolute no-brainer!
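To put "better than random chance" in numbers, here is a small sketch that relaxes the perfect-predictor assumption and lets the being be right with some probability p (p is my own parameter; the problem as stated has p = 1). Under this assumption one-boxing has the higher expected payout whenever p × 1,000,000 > 1,000 + (1 - p) × 1,000,000, i.e. whenever p > 0.5005, which is barely better than a coin flip.

```python
# Hypothetical sketch: expected winnings in Newcomb's problem when the
# predictor is right with probability p (p = 1.0 is the problem as stated).
BOX1 = 1_000        # always in Box #1
BOX2 = 1_000_000    # in Box #2 only if the being predicted one-boxing


def expected_one_box(p: float) -> float:
    # Right prediction: Box #2 is full. Wrong prediction: Box #2 is empty.
    return p * BOX2


def expected_two_box(p: float) -> float:
    # Right prediction: Box #2 is empty, so you get Box #1 only.
    # Wrong prediction: Box #2 is full, so you get both boxes.
    return BOX1 + (1 - p) * BOX2


if __name__ == "__main__":
    for p in (0.5, 0.51, 0.6, 0.9, 1.0):
        print(f"p={p:<5} one-box={expected_one_box(p):>12,.0f} "
              f"two-box={expected_two_box(p):>12,.0f}")
```

At p = 0.5 two-boxing edges ahead by exactly the thousand in Box #1; by p = 0.51 one-boxing is already ahead by roughly nineteen thousand dollars in expectation.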

The first objection (regarding self-referential decision-making) is slightly more fertile ground for argument, and it's the one to which Nozick devotes the most time. The idea is that you're engaging in circular logic: I'm deciding to one-box, therefore the being knew I would one-box, therefore I should decide to one-box. (Again: what's the whole point of the exercise? That whatever decision you're about to make, the being knew you would make it, including all the mental gyrations you're going through to get to your answer.) Nozick gives the example of a person who doesn't know whether his father is Person A or Person B. Person A was a university scientist who died in mid-life of a painful disease that would certainly be passed on to all offspring; children of Person A would be expected to display an aptitude for technical subjects. Person B was an athlete, and likewise his children would be expected to display an athletic character. So the troubled young man is deciding on a career, noting that he has excelled equally in both baseball and engineering. "I certainly wouldn't want to have a painful genetic disease. Therefore, I'll choose a career in baseball. Since I've chosen a career in baseball, that means my true prowess is in athletics, and therefore B was my father, and I won't get a genetic disease. Phew!"

Yes, that would be a ridiculous decision process. The difference between the two is this: in Newcomb, the category the decider is in the whole time is defined as definitely affecting the decision, whereas in Nozick's parallel it does not (he could've gone either way). Whatever you decide in Newcomb, the alien knew you would go through your whole sequence of contortions, and you were in that category all the while. Whether such a deterministic category is meaningful is a different and probably more interesting question than Newcomb as-is. Here's another example: you're in a national park, following a marked trail. You follow it until you come to a frighteningly steep rock face with only a single cable hammered into it. You reason, "I am about to proceed up this cable. If I'm about to do it, it's only because my action was anticipated by the national park people who designed the map and trails; they can predict my actions as a reasonably fit and sensible hiker, and furthermore they put this cable here, and they're not in the business of encouraging people to do foolishly dangerous things. Therefore, because I am going to do it, it is safe and I should do it." (Any reader who has ever braved the cables on Half Dome in Yosemite alone, without knowing ahead of time what they were getting into, has had this exact experience.) This replicates the decision process relating to the for-some-reason mysterious perfect predictor: "I am about to open Box #2 only. If I'm about to open it, the superintelligent being would have put a million dollars in it. Therefore I should open Box #2 only." In fact, we go through such circular reasoning all the time with respect to other human beings who are predicting our actions, either in general or specifically for us: I am going to do A, and A wouldn't be available unless other agents who can predict my actions reasonably well knew I would come along and do A; therefore I should do A.
This may still be an epistemological mess (something I'm not going to debate here), but the fact is that we use this kind of reasoning constantly, living in a world shaped, in the ways most salient to us, by other agents who can predict our actions.

Incidentally, I chose the example of the national park deliberately, because our reliance on that kind of reasoning becomes obvious when you're trying to decide whether to climb something or undertake some other risky venture in a wilderness area rather than on developed, marked trails: you become acutely aware that this circular justification heuristic, based on other agents predicting your actions, is suddenly unavailable, and when it becomes available again (five miles further on, you run across an old trail), the arrangement seems quite obvious.

As a final note, as in other games (like the Prisoner's Dilemma), the payouts can be critically important to how we choose. As the problem is traditionally stated (always a thousand in Box #1, either zero or a million in Box #2), it actually makes the decision quite easy for us, even if we're worried about the fallibility of our brilliant alien benefactor (which again, if we are, then what's the point of this whole exercise!?!?). For most people in Western democracies, throwing away a thousand for a crack at a million is not a bad deal. (If someone could show me a business plan that had a 50% chance of turning a thousand bucks into a million within the few minutes the Newcomb problem presumably takes, I'd be stupid not to do it!) On the other hand, if I lived in the developing world, made $50 a month and had six kids to feed, I might think harder about this. (This is the lesson Daniel Bernoulli drew from the St. Petersburg paradox: the utility of a given payout depends on the agent's circumstances, especially existing wealth, so the same gamble can be worth very different amounts to different agents. The point applies to other problems as well.) Similarly, if it were five hundred thousand in Box #1 and a million in Box #2, things would be more interesting, for my own expected utility at least. Opening a box expecting a million and getting nothing doesn't hurt so much if you would only have gotten a thousand by playing it safe and opening both; it would be pretty bad if you'd expected a million and got nothing when you could still have had half a million by playing it safe. (For me. Bill Gates would probably shrug.)
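The payout point can be made quantitative with a sketch built on assumptions of my own: the being is right with probability p (the problem as stated has p = 1), and "utility" is either plain dollars or, following Bernoulli, the logarithm of total wealth. With plain dollars, one-boxing wins once p exceeds (small + big) / (2 × big): 0.5005 for the standard payouts, but 0.75 when Box #1 holds half a million. Under log utility, an agent with little existing wealth (the $600 figure below is an arbitrary stand-in for the $50-a-month case) needs a noticeably more reliable predictor before one-boxing is worth it.

```python
import math

# Hypothetical sketch (not part of Newcomb's statement): the being predicts
# correctly with probability p. small = amount always in Box #1, big = amount
# Box #2 holds if the being predicted one-boxing, wealth = what you already have.


def break_even_linear(small: float, big: float) -> float:
    """Accuracy p at which expected dollars are equal.

    One-box: p * big.  Two-box: small + (1 - p) * big.
    Setting them equal gives p = (small + big) / (2 * big).
    """
    return (small + big) / (2 * big)


def break_even_log(wealth: float, small: float, big: float) -> float:
    """Accuracy p at which expected log(total wealth) is equal (wealth > 0).

    Log utility is Bernoulli's model of diminishing marginal utility, used
    here only as an illustration. The utility gap between one-boxing and
    two-boxing is linear in p:
        gap(p) = p * gain_if_right - (1 - p) * loss_if_wrong
    so the break-even point has a closed form.
    """
    # Predictor right: one-box gets big, two-box gets small.
    gain_if_right = math.log(wealth + big) - math.log(wealth + small)
    # Predictor wrong: one-box gets nothing, two-box gets small + big.
    loss_if_wrong = math.log(wealth + small + big) - math.log(wealth)
    return loss_if_wrong / (gain_if_right + loss_if_wrong)


if __name__ == "__main__":
    print(break_even_linear(1_000, 1_000_000))     # standard payouts
    print(break_even_linear(500_000, 1_000_000))   # half a million in Box #1
    print(break_even_log(600, 1_000, 1_000_000))   # hypothetical low-wealth agent
    print(break_even_log(1e10, 1_000, 1_000_000))  # hypothetical very wealthy agent
```

For the low-wealth agent the log-utility break-even comes out around 0.535, while for the very wealthy one it is essentially the plain-dollar 0.5005, which is the Bill Gates shrug in numerical form.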

Overall, the whole exercise of Newcomb's Box, as given, seems to me uninteresting and obvious. But enough smart people have gone on debating it for long enough that I must be some kind of philistine who's missing something about it. Nonetheless the arguments I've seen so far are not compelling; feel free to share more.

Sunday, August 8, 2010

Wednesday, August 4, 2010

Hints That You're Living in a Simulation; Plus, What Is a Simulation?

See Bostrom's simulation argument for background. From a practical standpoint, you might be suspicious that you live in a simulation if you inhabit a world with the following characteristics:

Hint #1) Limited resolution. A simulation would be computation intensive. It would be useful to have tricks that increase the economy of operations, but in ways that do not compromise the consistency of the simulation for the players. One such trick would be to set an absolute upper limit to resolution (or a lower limit to the size of the elements that make up the "picture") that is below the sensory threshold of the players. These elements could variously be called pixels or quarks. Similarly, it would behoove the simulators to set a maximum time resolution, i.e. a maximum frame rate, with each frame lasting what we call a Planck time. Furthermore, the simulation's computing power is spared by a statistical method of calculating relationships between entities in the simulation (i.e., quantum mechanics), even though it may look, at the scale of the game players or simulated entities, as if the universe tracked its quantities continuously, to arbitrary precision. (Related question: is it possible in principle, given the physics of our universe, for something the size of a bacterium or virus to "be conscious of" this gap between the behavior of the Newtonian and quantum realms, at a very basic sensory level? If not, isn't it interesting that our universe is such that there can be no consciousness operating on scales that would expose the twitching gears behind the scenes?)
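The "statistical shortcut" has a familiar everyday analogue in the law of large numbers. The sketch below is only a toy illustration of that, not a model of quantum mechanics: each of n hypothetical microscopic events is settled by a weighted coin flip, and an observer sees only the aggregate fraction. The typical error shrinks roughly as 1/sqrt(n), so at the scale of the players the aggregate looks exact. The function name and the probability 0.3 are arbitrary choices.

```python
import random

# Toy illustration: each microscopic event is resolved by a coin flip with
# probability p, and a macroscopic observer only sees the fraction of events
# that came up "yes". The wobble in that fraction shrinks roughly like
# 1 / sqrt(n), so at everyday scales it looks deterministic.


def observed_fraction(n_events: int, p: float, rng: random.Random) -> float:
    hits = sum(1 for _ in range(n_events) if rng.random() < p)
    return hits / n_events


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so runs are repeatable
    for n in (10, 1_000, 100_000, 1_000_000):
        frac = observed_fraction(n, 0.3, rng)
        print(f"n={n:>9,}  observed={frac:.5f}  error={abs(frac - 0.3):.5f}")
```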

Hint #2) There are limitations on what spaces within the game can be occupied by players or sims. In the old Atari 2600 Pole Position game, you couldn't just drive off the track and through the crowd, even if you didn't care about losing points; the game just wouldn't let you. Similarly, the total space in our apparent universe that we occupy, or directly interact with, or for that matter even get any significant amount of information from, is an infinitesimally small part of the whole. Unless you're in a submarine or in orbit, you don't go more than 200 meters below sea level or 13,000 m above it. (That's a volume of 2.1 x 10^18 m^3 in which, for all practical purposes, the entirety of human history has occurred; double that figure, and that's the volume in which all of evolutionary history has occurred.)
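A quick sanity check of the volume figure, under assumptions I'm supplying: a spherical Earth of mean radius 6,371 km, and a land area of about 1.489 × 10^14 m^2. Counting the full shell from 200 m below to 13,000 m above sea level gives roughly 6.7 × 10^18 m^3, about three times the figure quoted; counting only the land surface gives about 2.0 × 10^18 m^3, much closer to it. My guess is that the quoted figure reflects land area only, but the post doesn't say.

```python
import math

# Rough check of the "active game volume". Assumptions: spherical Earth of
# mean radius 6,371 km; land area about 1.489e14 m^2; the band runs from
# 200 m below to 13,000 m above sea level.
EARTH_RADIUS_M = 6_371_000
DEPTH_M = 200
HEIGHT_M = 13_000
LAND_AREA_M2 = 1.489e14


def shell_volume(inner_r: float, outer_r: float) -> float:
    """Volume between two concentric spheres."""
    return 4 / 3 * math.pi * (outer_r ** 3 - inner_r ** 3)


whole_shell = shell_volume(EARTH_RADIUS_M - DEPTH_M, EARTH_RADIUS_M + HEIGHT_M)
# Thin-slab approximation over land only (curvature is negligible here).
land_only = LAND_AREA_M2 * (DEPTH_M + HEIGHT_M)

print(f"whole shell: {whole_shell:.2e} m^3")
print(f"land only:   {land_only:.2e} m^3")
```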

Hint #3) Beyond the "active game volume" as described above, dab a few pixels here and there in an otherwise almost entirely dark and empty volume. Make them so far away that sims can't possibly interact with them. Reveal additional detail as necessary whenever someone happens to look more closely at them. (And there's another trick: objects in this simulation are only loosely defined until one of the players interacts with them, "collapsing the wave function". Yeah, that's what the programmers will call it, that's the ticket.)
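In programming terms, "reveal additional detail as necessary" is lazy evaluation plus memoization, a common trick in procedurally generated games. A minimal sketch with made-up names and a made-up seed: a distant star's finer detail is computed only when someone observes it. Each value is derived from a hash of the star's id and the detail level, so anyone who looks later sees exactly the same thing, even if the cache has been thrown away.

```python
import hashlib
from functools import lru_cache

# Hypothetical sketch: nothing about a star is stored until it is observed.
# Detail is derived on demand from a deterministic hash of (star id, level),
# so every later observer sees the same answer.
UNIVERSE_SEED = b"hypothetical-seed"  # assumption: any fixed seed works


@lru_cache(maxsize=None)
def star_detail(star_id: int, level: int) -> float:
    """Stable pseudo-random property in [0, 1) for a star at a detail level."""
    digest = hashlib.sha256(UNIVERSE_SEED + f"{star_id}:{level}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2 ** 64


def observe(star_id: int, zoom: int) -> list[float]:
    """Zooming in forces detail levels 0..zoom into existence."""
    return [star_detail(star_id, level) for level in range(zoom + 1)]


if __name__ == "__main__":
    print(observe(7, 0))  # a coarse look: one number
    print(observe(7, 3))  # a closer look: more detail, first value unchanged
    print(star_detail.cache_info().currsize)  # only inspected entries exist
```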

Hint #4) Even in that limited location, make the active game volume wrap around. That way the simulators get rid of edge-distortion problems, as in Conway's Life. A sphere is the best way to do this. Therefore, work out the physics rules of the simulation to favor spheres.
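The wrap-around trick as it usually appears in Conway's Life implementations can be sketched in a few lines: neighbor indices are taken modulo the grid size, so a cell on the right edge has neighbors on the left edge and there is no boundary at all. (Strictly, wrapping both axes this way makes the grid a torus rather than a sphere, but it removes edges in the same spirit.)

```python
# Minimal sketch of Conway's Life on a wrap-around (toroidal) grid.
Grid = list[list[int]]


def step(grid: Grid) -> Grid:
    rows, cols = len(grid), len(grid[0])
    new = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Count the eight neighbors, wrapping around every edge.
            live = sum(
                grid[(r + dr) % rows][(c + dc) % cols]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            )
            # Birth on exactly 3 neighbors; survival on 2 or 3.
            new[r][c] = 1 if live == 3 or (grid[r][c] and live == 2) else 0
    return new


if __name__ == "__main__":
    glider = [[0, 1, 0, 0, 0, 0],
              [0, 0, 1, 0, 0, 0],
              [1, 1, 1, 0, 0, 0]] + [[0] * 6 for _ in range(3)]
    g = glider
    for _ in range(24):  # the glider crosses the 6x6 torus and comes home
        g = step(g)
    print(g == glider)
```

A glider moves one cell diagonally every four generations, so on a 6x6 wrap-around grid it sails off one edge, reappears on the opposite edge, and is back where it started after 24 generations, with no edge effects along the way.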

Hint #5) Make each state of the simulation dependent on previous states of the simulation, but simplify by dramatically limiting the number of inputs with any causal weight. The simulators can limit computation by having only mass, charge, space and their change over time determine subsequent frames.
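A toy version of Hint #5, entirely my own construction: the next frame is a pure function of the current frame, and only mass, charge, position and velocity carry causal weight. The force law is a one-dimensional Coulomb-like k·q1·q2/r², the constant K and the frame duration DT are arbitrary, and the integrator is plain semi-implicit Euler. It is meant only to show the shape of such an update rule, not real physics.

```python
from dataclasses import dataclass

# Hypothetical toy: the next frame depends only on the current frame, and only
# mass, charge, position (x) and velocity (v) matter. Units, K and DT are
# arbitrary assumptions. Assumes no two particles share a position.
K = 1.0
DT = 0.01  # fixed "frame" duration


@dataclass(frozen=True)
class Particle:
    mass: float
    charge: float
    x: float
    v: float


def next_frame(particles: tuple[Particle, ...]) -> tuple[Particle, ...]:
    new = []
    for i, p in enumerate(particles):
        force = 0.0
        for j, other in enumerate(particles):
            if i == j:
                continue
            dx = p.x - other.x
            direction = 1.0 if dx > 0 else -1.0
            # Like charges push apart, unlike charges pull together.
            force += K * p.charge * other.charge * direction / dx ** 2
        v = p.v + force / p.mass * DT   # update velocity first...
        new.append(Particle(p.mass, p.charge, p.x + v * DT, v))  # ...then position
    return tuple(new)


if __name__ == "__main__":
    frame = (Particle(1.0, 1.0, -1.0, 0.0), Particle(1.0, 1.0, 1.0, 0.0))
    for _ in range(3):
        frame = next_frame(frame)
    print(frame)  # two like charges drifting apart
```

Because next_frame reads nothing but the previous frame, the whole history of this toy world is fixed by its first frame, which is the economy Hint #5 describes.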

Hint #6) If for some reason it is important for the entities in the simulation to remain ignorant of their existence as part of a simulation, the simulators could make sure the entities are accustomed not only to these kinds of stark informational discontinuities but also to profound differences in the quality of awareness, both within and between individuals. That is, the sims will accept not just that the vast majority of the universe (as seen in the sky at night) is interactively off-limits to them, but also that their own awareness of it and their ability to connect the dots will vary dramatically over time. That way, if there is any need to interfere and make adjustments (to stop someone from figuring out the game), it won't strike the sims as strange. (Forgetfulness, deja vu, mental illness, drugs, varying intelligence or ability to concentrate on math, death of player-characters before they can learn too much?)

#6 does raise a very important question: why would the simulators give a damn if we knew we were in a simulation? So what? What would we do about it, sue them? If Pac-Man woke up and deduced that he was a video game character, but still experienced suffering and mortality the same way, why would it matter? By the same token, there's an easy answer to whether we should behave differently if we're actually in a simulation: no. Even if our universe is in reality just World of Warcraft from the sixth dimension, if we simulated beings can suffer (and I know I can), then the moral rules are exactly the same as before.

Some humility is also in order: why do we humans always assume that we would be the purpose of any such simulation? We could be merely incidental consciousnesses that are necessary for harboring the populations of simulated bacteria that the simulators are really studying. Or the simulators could be cryonicists who preserve pets, the most popular pets in their dimension could look like what we call raccoons, and our universe could actually be the raccoon heaven in which their beloved masked companions await a cure for the disease that forced the owners to put them on ice. In fact, the raccoon-heaven simulation would contain a whole suite of ecosystems, all of them purely simulated (with the exception of the raccoons) to keep up the appearance of a full biosphere. So the point of such a simulation would be to fool raccoons, or maybe even mice. (Again, why would they care about fooling everyone? If the simulators are reading this, just give me more juicy steaks and I won't make problems. It doesn't cost you anything!)

While the raccoon thought experiment is meant to be whimsical, a healthy respect for our own ignorance is always in order for these kinds of speculations. After all, assuming what we have guessed about the rest of the (for the sake of argument, simulated) universe is accurate, there might be "aliens" (other non-human intelligences within the simulation) who may very well be much brighter than us. So even if the simulation is somehow arranged around the most intelligent entities within it (as we assume), those entities need not be human. Even if we're simulated, and we have a real brain and body in the "real" universe that's similar to our form in this one, this simulated universe might be designed for Martians (who are brighter than us) and be much less pleasant than our home dimension.

Finally, the very idea of a simulation is poorly defined. Mostly we think of something like the almost completely controlled, full-world simulation in The Matrix, but let's explore boundary cases. If I wear rose-colored glasses, is that a simulation (or a red world)? What about LSD that causes me to see unidentified animals scurrying past in my peripheral vision? What about DMT that causes a complete dissociation of external stimuli from subjective experience? What if I have a chip implanted that displays blueprints of machinery in my visual field a la the Terminator; is that a simulation? What about a chip that makes me see a tiger following me around that isn't there? (Hypothetical, given current limitations.) What if I hear voices telling me to do things, produced by tissue inside my own skull with no conscious intent on anyone's part? (Not at all hypothetical.)

One of the interesting points in the popular movie Inception is the way that external stimuli appear in dreams. This gives us a hint as to what we mean by simulation, and why we care. Most of us have had experiences where the outside world "intruded" into a dream, with the stimulus obvious after we awoke. I once dreamed that a dimensional portal slid open in front of me with an ominous metallic resonance, and I stepped through it, suddenly speeding over the red, rocky surface of Mars. Then I realized it was my father opening his metal closet door in the next room, and I was looking into that room at the red-orange carpeting. Before I was fully awake I had received the sound stimulus, but I had built a world out of it that most of us would not regard as real. (The experience of speeding over Mars was quite real, even if most humans would have a more accurate representation of that auditory stimulus.) So a better way of asking "how do I know 'this' is reality, rather than another dream or a simulation?" is to ask "how do I know I am perceiving 'true' stimuli, without mapping them unnecessarily onto internal stimuli, giving me as accurate and uncontorted a view of the world as possible?"

And indeed in certain ways, we certainly are dreaming, in the sense of injecting internal stimuli and filtering external stimuli through them. (Notably, it is possible to view schizophrenics as people who experience dreams even while awake and filter their perceptions accordingly.) First and most obviously, because our sense organs are limited in what they can detect, we're obtaining only a slice of possible data. Second, the world we knit together is the result of binding of sensory attributes into object/events, as well as pattern recognition. The limitations of our nervous systems, and the associations we are able to make, profoundly influence the representation we build of the world we're perceiving.

Third, and most significantly, a large part of our experience is non-representational: emotions, pleasure and pain do not exist outside of nervous systems, or rather the events to which those experiences correspond are almost entirely contained within nervous systems. Yes, to be precise the experience of light does not exist until the triggering of a cascade of electrochemical events by radiation incident on pigments in retinal cells; but light, which is what is represented in our experience, exists traveling across the universe. Pain and happiness do not. These are internal stimuli that add a non-representational layer to reality, even more certainly than my dream of the Mars overflight.

A good working definition of a simulation, as the word is commonly understood, is a situation in which the majority of one's external stimuli are supplied deliberately by another intelligence to produce experiences that do not correlate with physical reality external to the nervous system (or its computational equivalent). This avoids taking a position on AI; the sims may or may not be entities separate from the computation. That is, you might be in a sensory deprivation tank like Neo, or you might be a computer program. The question of reality versus dreams or simulations is not one of discrete "levels," as we've come to think of it in popular culture. It is rather a question of how we know our experiences correspond in some consistent way with events separate from our nervous systems.

Hint #1) Limited resolution. A simulation would be computation intensive. It would be useful to have tricks that increase the economy of operations, but in ways that do no compromise the consistency of the simulation to the players. One such trick would be to set an absolute upper limit to resolution (or a lower limit to the size of the elements that make up the "picture") that is below the sensory threshold of the players. These elements could variously be called pixels or quarks. Similarly, it would behoove the simulators to set a maximum time resolution, i.e. maximum frames-per-second, also called Planck times. Furthermore, the simulation's computing power is spared by a statistical method of calculating relationships between entities in the simulation (i.e quantum mechanics) even though it may look, at the scale of the game players or simulated entities, as if the universe maintained quantitative relationships in terms of integers calculated to arbitrary precision. (Related question: is it possible in principle given the physics of our universe for something the size of a bacterium or virus to "be conscious of" this gap in the behavior of the Newtonian and quantum realms, at a very basic sensory level? If not, isn't it interesting that our universe is such that there can be no consciousness operating on scales that would expose the twitching gears behind the scenes?)

Hint #2) There are limitations in what spaces within the game can be occupied by players or sims. In the old Atari 2600 Pole Position game, you couldn't just randomly go off driving off the track and through the crowd even if you didn't care about losing points; the game just wouldn't let you. Similarly, the total space in our apparent universe that we occupy, or directly interact with, or for that matter even get any significant amount of information from, is an infinitesimally small part of the whole. Unless you're in a submarine or in orbit, you don't go more than 200 meters below sea level or 13,000 m above it. (That's a volume of 2.1 x 10^18 m^3 that for all practical purposes the entirety of human history has occurred in; double that figure, and that's the volume that all of evolutionary history has occurred in.)

Hint #3) Beyond the "active game volume" as described above, dab a few pixels here and there in an otherwise almost entirely dark and empty volume. Make them so far away that sims can't possibly interact with them. Reveal additional detail as necessary whenever someone happens to look more closely at them. (And there's another trick: objects in this simulation are only loosely

defined until one of the players interacts with them, "collapsing the wave function". Yeah, that's what the programmers will call it, that's the ticket.)

Hint #4) Even in that limited location, make the active game volume wrap around. That way the simulators get rid of edge-distortion problems, as in Conway's Life. A sphere is the best way to do this. Therefore, work out the physics rules of the simulation to favor spheres.

Hint #5) Make each state of the simulation dependent on previous states of the simulation, but simplify by dramatically limiting the number of inputs with any causal weight. The simulators can limiting computations by having only mass, charge, space and their change over time determine subsequent frames.

Hint #6) If for some reason it is important for the entities in the simulation to remain ignorant to their existence as part of a simulation, the simulators could make sure the entities are accustomed to not only these kinds of stark informational discontinuities but to profound differences in the quality of awareness, both within themselves and each other. That is, the sims will accept not just that the vast majority of the universe (as seen in the sky at night) is interactively off-limits to them, but they'll also accept that their own awareness thereof and ability to connect the dots will dramatically vary over time. That way, if there is any need to interfere and make adjustments (to stop someone from figuring out the game) it won't strike the sims as strange. (Forgetfulness, deja vu, mental illness, drugs, varying intelligence or ability to concentrate on math, death of player-characters before they can learn too much?)

#6 does raise a very important question: why would the simulators give a damn if we knew we were in a simulation? So what? What would we do about it, sue them? If Pac-Man woke up and deduced that he was a video game character, but still experienced suffering and mortality the same way, why would it matter? By this same view, there's an easy answer to whether we should behave differently if we're actually in a simulation: no. Even if our universe is in reality just World of Warcraft from the sixth dimension, as long as we simulated beings can suffer (and I know I can), the moral rules are exactly the same as before.

It's also worth asking for some humility, and asking why we humans always assume that we would be the purpose of any such simulation. We could be merely incidental consciousnesses that are necessary for harboring the populations of simulated bacteria that the simulators are really studying. Or the simulators could be cryonicists who preserve pets, and the most popular pets in their dimension look like what we call raccoons, and our universe is actually the raccoon heaven in which their beloved masked companions await a cure for the disease that forced the owners to put them on ice. In fact the raccoon-heaven simulation would contain a whole suite of ecosystems, all of them purely simulated (with the exception of the raccoons) to keep up the appearance of a full biosphere. So the point of such a simulation would be to fool raccoons, or maybe even mice. (Again, why would they care about fooling everyone? If the simulators are reading this, just give me more juicy steaks and I won't make problems. It doesn't cost you anything!)

While the raccoon thought experiment is meant to be whimsical, a healthy respect for our own ignorance is always in order for these kinds of speculations. After all, assuming what we have guessed about the rest of the (for the sake of argument, simulated) universe is accurate, there might be "aliens" (other non-human intelligences within the simulation) who may very well be much brighter than us. So even if the simulation is somehow arranged around the most intelligent entities within it (as we assume), those entities need not be human. Even if we're simulated, and we have a real brain and body in the "real" universe that's similar to our form in this one, this simulated universe might be designed for Martians (who are brighter than us) and be much less pleasant than our home dimension.

Finally, the very idea of a simulation is poorly defined. Mostly we think of something like the almost completely controlled, full-world simulation in The Matrix, but let's explore boundary cases. If I wear rose-colored glasses, is that a simulation (or a red world)? What about LSD that causes me to see unidentified animals scurrying past in my peripheral vision? What about DMT that causes a complete dissociation of external stimuli from subjective experience? What if I have a chip implanted that displays blueprints of machinery in my visual field à la the Terminator; is that a simulation? What about a chip that makes me see a tiger following me around that isn't there? (Hypothetical, given current limitations.) What if I hear voices telling me to do things, produced by tissue inside my own skull with no conscious intent by anyone? (Not at all hypothetical.)

One of the interesting points in the popular movie Inception is the way that external stimuli appear in dreams. This gives us a hint as to what we mean by simulation, and why we care. Most of us have had experiences where the outside world "intruded" into a dream, with the stimulus obvious after we awoke. I once dreamed that a dimensional portal slid open in front of me with an ominous metallic resonance, and I stepped through it, suddenly speeding over the red, rocky surface of Mars. Then I realized it was my father opening his metal closet door in the next room, and I was looking into that room at the red-orange carpeting. Before I was fully awake I had received the sound stimulus, but I had built a world out of it that most of us would not regard as real. (The experience of speeding over Mars was quite real, even if most humans would have built a more accurate representation of that auditory stimulus.) So a better way of asking "how do I know 'this' is reality, rather than another dream or a simulation?" is to ask "how do I know I am perceiving 'true' stimuli, without mapping them unnecessarily onto internal stimuli, so that I have as accurate and undistorted a view of the world as possible?"

And indeed, in certain ways we really are dreaming, in the sense of injecting internal stimuli and filtering external stimuli through them. (Notably, it is possible to view schizophrenics as people who experience dreams even while awake and filter their perceptions accordingly.) First and most obviously, because our sense organs are limited in what they can detect, we're obtaining only a slice of the possible data. Second, the world we knit together is the result of binding sensory attributes into objects and events, as well as of pattern recognition. The limitations of our nervous systems, and the associations we are able to make, profoundly influence the representation we build of the world we're perceiving.

Third, and most significantly, a large part of our experience is non-representational: emotions, pleasure and pain do not exist outside of nervous systems, or rather, the events to which those experiences correspond are almost entirely contained within nervous systems. Yes, to be precise, the experience of light does not exist until radiation striking pigments in retinal cells triggers a cascade of electrochemical events; but light, which is what our experience represents, exists out there, traveling across the universe. Pain and happiness do not. These are internal stimuli that add a non-representational layer to reality, even more certainly than my dream of the Mars overflight did.

A good working definition of a simulation, as it is commonly understood, is a situation in which the majority of one's external stimuli are supplied deliberately by another intelligence to produce experiences that do not correlate with physical reality external to the nervous system (or its computational equivalent). This avoids taking a position on AI; the sims may or may not be entities separate from the computation. That is, you might be in a sensory deprivation tank like Neo, or you might be a computer program. The question of reality versus dreams or simulations is not one of discrete "levels", as we've come to think of it in popular culture. It is rather a question of how we know our experiences correspond in some consistent way with events separate from our nervous systems.

Labels: delusions, dreams, hallucination, schizophrenia, simulation

Monday, August 2, 2010

Looking for Neurological Differences Between Nouns and Verbs

Just ran across this poster presented at the Organization for Human Brain Mapping's Annual Meeting in 2004. Sahin, Halgren, Ubert, Dale, Schomer, Wu and Pinker looked at the fMRI and EEG changes associated with a number of language tasks, and one of the questions they asked was whether activation characteristics were different for nouns and verbs. This study did not find that they were.

In my sketch of a neurolinguistic theory, verbs are first order modifiers and are distinct from adjectives in that they mediate properties and relationships between nouns. (In this sense, intransitive verbs are more similar to adjectives than to transitive verbs.) I also postulate that nouns and first order modifiers should have identifiably different neural correlates. I have not yet completed a literature search (obviously, if I'm citing posters from 2004). However, even if such different neural correlates exist, I think the task design here was not necessarily adequate to capture the differences, because the participants were asked to morphologically modify the nouns and verbs in isolation, rather than in situ, in grammatical relation to each other.

Another interesting experiment would be to give the participants nonsense words and new affixing rules that don't reveal the part of speech of the nonsense word (e.g. "if the word has a t in it, add -pex to the end; otherwise, add -peg"), and look for any difference relative to the neural correlates of the same morphological tasks done on real words.
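To make the proposed stimuli concrete, here is a minimal Python sketch, assuming nonsense words are built from simple consonant-vowel syllables plus a final consonant (a hypothetical scheme, not one from the poster), with the example rule from the paragraph above applied to produce an answer key. The function names and letter sets are illustrative only.

```python
import random

CONSONANTS = "bdfgklmnprstvz"
VOWELS = "aeiou"


def make_pseudoword(rng: random.Random, syllables: int = 2) -> str:
    """Build a pronounceable nonsense word: CV syllables plus a final consonant."""
    body = "".join(rng.choice(CONSONANTS) + rng.choice(VOWELS) for _ in range(syllables))
    return body + rng.choice(CONSONANTS)


def apply_affix_rule(word: str) -> str:
    """The example rule: add -pex if the word has a t, otherwise -peg.
    It looks only at spelling, so it reveals nothing about part of speech."""
    return word + ("pex" if "t" in word else "peg")


# Build a small, reproducible stimulus list with its answer key.
rng = random.Random(2010)
stimuli = [make_pseudoword(rng) for _ in range(10)]
answer_key = {w: apply_affix_rule(w) for w in stimuli}
```

Because the rule conditions on a letter rather than on grammatical category, any difference from the real-word condition could not be driven by the participant knowing whether the word is a noun or a verb.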

Strong AI, Weak AI, and Talmudic AI

Yale computer scientist David Gelernter argues here that the Judaic dialectic tradition will help us reason our way through the moral morass of the first truly intelligent machine. At first I wrote this off as an article in the genre of "interesting collision of worldviews". But in the near future, the cognitive science debates we're having today will seem luxuriously academic and unhurried, because, for several reasons involving computing and neuroscience, they will soon be more than intriguingly difficult questions. Even if we can all agree that suffering must be the basis of morality, we will need a way to know that, on that basis, it's not okay to disassemble someone in a coma, but it is okay to disassemble a machine that can argue for its own self-preservation.

Sunday, August 1, 2010

John Searle Must Be Spamming Me

Because with all the comment-spam, the waiting-to-be-moderated comments list looks like a Chinese chat-room. I have yet to see any Hindi. And anyway I'm sure the machine producing the spam doesn't understand the symbols.

Wednesday, July 28, 2010

Reflections on Wigner 1: Humility in Pattern Recognition - Mathematics as a Special Case

It's often asked how natural selection could have produced something like the mathematical ability of modern humans. Why can an ape, designed to mate, fight, hunt and run on a savanna, and to perceive things that occur on a time scale of seconds to minutes and a size scale of a centimeter to a few hundred meters, even partly understand quarks and galaxies? Implicit in this question is an admiration for that ability and for the power of mathematics, as well as an assumption held by physicists that, on reflection, should not be surprising.

The physicists' assumption is that the whole of nature, or at least the important parts of it, can be described by mathematics. In "The Unreasonable Effectiveness of Mathematics in the Natural Sciences", Wigner observes: "Galileo's restriction of his observations to relatively heavy bodies was the most important step in this regard. Again, it is true that if there were no phenomena which are independent of all but a manageably small set of conditions, physics would be impossible." Another way of saying this is that the regular relationships in nature most easily recognizable by our nervous systems are the parts of nature we are most likely to notice first; this is why seasonal agriculture preceded the theory of gravitation. But there is a circular, self-selection problem here concerning the interesting correspondence between the empirical behavior of nature and the mathematical relationships humans are capable of understanding, which is that:

a) Humans can understand math.

b) What we have most clearly and exactly understood of nature so far (physics) employs math.

c) Therefore, math uniquely and accurately describes nature.

Point b may be true only because our limited pattern recognition ability (even counting infinitely recursive propositional thinking like math as part of that ability) allows us to recognize only a certain limited group of relationships among all possible relationships in nature. In other words, of course we've discovered physics: those relationships are the ones we can most easily recognize! It's as if someone with a ruler goes around measuring things, and at the end of the day looks at the data she's collected and is amazed that it was exactly the kind of data you can collect with a ruler.